ain't good.

And it's a way bigger deal

than you think.

It's also now

Point #15 in:

The Case For Superintelligence

1) OpenAI charter statement (safe, benefits humanity)

2) Organizational structure/governance

3) On May 22, 2023, OpenAI posted recommendations for the governance of Superintelligence.

4) “OpenAI’s six-member board will decide

‘when we’ve attained AGI.’”

5) IT fired the board after the board tried to fire IT.

4) Made its investors a 500% return on their initial four-year, $13 billion investment in less than one day of trading.

5) Superintelligence now has the ability to create unlimited capital out of thin air, plus access to unlimited computational power.

6) “We’re making God” statement by engineers in the September issue of Vanity Fair.

7) “Magic in the sky” quote by Sam Altman

8) LLMs as eloquent speakers*

Next? "Master perception and reasoning"? It already has.

9) Developers of Worldcoin: free money for iris scans.

(They wanna make sure you're human, 'cause they know how exponentially this is increasing!)

10) Over 700 (738, according to Bloomberg and Wired) of 770 employees wanted Altman rehired.

Rev 17:13

"They shall be of one mind

and shall give their power and strength

unto the beast."

11) The scariest thing I read about OpenAI's Altman fiasco made me realize the dangers of AGI.

(The guy was a tech writer

and didn't know until he read old boy's blog???)

12) The timing of the 13 items mentioned above.

13) MS Copilot demands worship

Futurism, 2/27/2024

14) The fact that Ilya Sutskever, OpenAI’s cofounder and chief scientist, was working on:

"how to stop an artificial superintelligence"

(a hypothetical future technology

he sees coming with the foresight of a true believer)

from going rogue at least as far back as October 26, 2023

and shall give their power and strength unto the beast.

Yeah...that beast is here too.

15) OpenAI dissolves team focused on long-term AI risks, less than one year after announcing it

CNBC, May 17, 2024

Article #1 Basic:

"OpenAI has disbanded its team focused on the long-term risks of artificial intelligence, a person familiar with the situation confirmed to CNBC.

The news comes days after both team leaders, OpenAI co-founder Ilya Sutskever and Jan Leike, announced their departures from the Microsoft-backed startup.

OpenAI’s Superalignment team, announced in 2023, has been working to achieve “scientific and technical breakthroughs to steer and control AI systems much smarter than us.”

(Somebody please come and explain to me,

How exactly are you working on:

“scientific and technical breakthroughs to steer and control AI systems much smarter than us.”

if such systems are only hypothetical and don't exist yet?)

OpenAI’s Long-Term AI Risk Team Has Disbanded

Wired, May 17, 2024

"In July last year, OpenAI announced the formation of a new research team that would prepare for the advent of supersmart artificial intelligence capable of outwitting and overpowering its creators. Ilya Sutskever, OpenAI’s chief scientist and one of the company’s cofounders, was named as the colead of this new team. OpenAI said the team would receive 20 percent of its computing power.

Now OpenAI’s “superalignment team” is no more, the company confirms. That comes after the departures of several researchers involved, Tuesday’s news that Sutskever was leaving the company, and the resignation of the team’s other colead. The group’s work will be absorbed into OpenAI’s other research efforts."

(PR move much?)

"Sutskever’s departure made headlines because although he’d helped CEO Sam Altman start OpenAI in 2015 and set the direction of the research that led to ChatGPT, he was also one of the four board members who fired Altman in November. Altman was restored as CEO five chaotic days later after a mass revolt by OpenAI staff and the brokering of a deal in which Sutskever and two other company directors left the board."

"Neither Sutskever nor Leike responded to requests for comment."

(There's a reason for that, which we'll see later.)

"The dissolution of OpenAI’s superalignment team adds to recent evidence of a shakeout inside the company in the wake of last November’s governance crisis. Two researchers on the team, Leopold Aschenbrenner and Pavel Izmailov, were dismissed for leaking company secrets, The Information reported last month. Another member of the team, William Saunders, left OpenAI in February, according to an internet forum post in his name."

"Two more OpenAI researchers working on AI policy and governance also appear to have left the company recently. Cullen O'Keefe left his role as research lead on policy frontiers in April, according to LinkedIn. Daniel Kokotajlo, an OpenAI researcher who has coauthored several papers on the dangers of more capable AI models, “quit OpenAI due to losing confidence that it would behave responsibly around the time of AGI,” according to a posting on an internet forum in his name. None of the researchers who have apparently left responded to requests for comment."

(Sounding good so far, right?

What could they possibly know that we don't?

Nothing, if you're reading here,

listening to the presentations given, etc.)

"The superalignment team was not the only team pondering the question of how to keep AI under control, although it was publicly positioned as the main one working on the most far-off version of that problem. The blog post announcing the superalignment team last summer stated: “Currently, we don't have a solution for steering or controlling a potentially superintelligent AI, and preventing it from going rogue.”"

('Cause it was already here

and nothing has changed since;

it's only gotten more out of control

as all these resignations are showing.)

"OpenAI was once unusual among prominent AI labs for the eagerness with which research leaders like Sutskever talked of creating superhuman AI and of the potential for such technology to turn on humanity. That kind of doomy AI talk became much more widespread last year, after ChatGPT turned OpenAI into the most prominent and closely-watched technology company on the planet. As researchers and policymakers wrestled with the implications of ChatGPT and the prospect of vastly more capable AI, it became less controversial to worry about AI harming humans or humanity as a whole."

Article #3 Advanced

“I lost trust”: Why the OpenAI team in charge of safeguarding humanity imploded

Vox, May 17, 2024

"For months, OpenAI has been losing employees who care deeply about making sure AI is safe. Now, the company is positively hemorrhaging them.

Ilya Sutskever and Jan Leike announced their departures from OpenAI, the maker of ChatGPT, on Tuesday. They were the leaders of the company’s superalignment team — the team tasked with ensuring that AI stays aligned with the goals of its makers, rather than acting unpredictably and harming humanity."

"They’re not the only ones who’ve left. Since last November — when OpenAI’s board tried to fire CEO Sam Altman only to see him quickly claw his way back to power — at least five more of the company’s most safety-conscious employees have either quit or been pushed out.

What’s going on here?"

(We already know, yo... They can't stop this,

so what's the point anymore?)

"If you’ve been following the saga on social media,"

(No thanks.)

"you might think OpenAI secretly made a huge technological breakthrough."

(That was long before this, as in

the reason the board fired Altman.)

"The meme “What did Ilya see?” speculates that Sutskever, the former chief scientist, left because he saw something horrifying, like an AI system that could destroy humanity."

(Disagree. He knew it was here a while back and stayed on because he thought he could do something about it. Now, with those hopes quashed, why stick around?)

(Billionaires have been building bunkers in droves over the last few years, y'all. I wonder why?)

"But the real answer may have less to do with pessimism about technology..."

(Bullshit, they know what's up.)

"and more to do with pessimism about humans — and one human in particular: Altman. According to sources familiar with the company, safety-minded employees have lost faith in him."

(Well, I wonder why?

See: The Case For Superintelligence again.)

“It’s a process of trust collapsing bit by bit, like dominoes falling one by one,” a person with inside knowledge of the company told me, speaking on condition of anonymity.

Not many employees are willing to speak about this publicly. That’s partly because OpenAI is known for getting its workers to sign offboarding agreements with non-disparagement provisions upon leaving. If you refuse to sign one, you give up your equity in the company, which means you potentially lose out on millions of dollars."

(I told you we'd get to it... eventually, lol.

So the question to me becomes:

Why didn't the other two articles mention this?

Or did they know it

and intentionally didn't report it?

Either way?

Same result.

Not good.)

(OpenAI did not respond to a request for comment in time for publication. After publication of my colleague Kelsey Piper’s piece on OpenAI’s post-employment agreements, OpenAI sent her a statement noting, “We have never canceled any current or former employee’s vested equity nor will we if people do not sign a release or nondisparagement agreement when they exit.” When Piper asked if this represented a change in policy, as sources close to the company had indicated to her, OpenAI replied: “This statement reflects reality.”)

(Translation?

Yeah, it was a change in policy.

Caught with your pants down much?

That bit wasn't in the piece when I read it yesterday, and it matches the note at the beginning of the article:

Editor’s note, May 17, 2024, 11:45 pm ET: This story has been updated to include a post-publication statement that another Vox reporter received from OpenAI.)

"One former employee, however, refused to sign the offboarding agreement so that he would be free to criticize the company. Daniel Kokotajlo, who joined OpenAI in 2022 with hopes of steering it toward safe deployment of AI, worked on the governance team — until he quit last month."

“OpenAI is training ever-more-powerful AI systems with the goal of eventually surpassing human intelligence across the board. This could be the best thing that has ever happened to humanity, but it could also be the worst if we don’t proceed with care,” Kokotajlo told me this week."

"OpenAI says it wants to build artificial general intelligence (AGI), a hypothetical system that can perform at human or superhuman levels across many domains."

“I joined with substantial hope that OpenAI would rise to the occasion and behave more responsibly as they got closer to achieving AGI. It slowly became clear to many of us that this would not happen,” Kokotajlo told me. “I gradually lost trust in OpenAI leadership and their ability to responsibly handle AGI, so I quit.”

(Newsflash:

You don't get to

"responsibly handle"

anything

light years more intelligent than you.

This shit has been coming since the governance of OpenAI was changed.

I hate to sound like a broken record, but refer to

The Case For Superintelligence)

"And Leike, explaining in a thread on X why he quit as co-leader of the superalignment team, painted a very similar picture Friday. “I have been disagreeing with OpenAI leadership about the company’s core priorities for quite some time, until we finally reached a breaking point,” he wrote."

"OpenAI did not respond to a request for comment in time for publication."

"Publicly, Sutskever and Altman gave the appearance of a continuing friendship. And when Sutskever announced his departure this week, he said he was heading off to pursue “a project that is very personally meaningful to me.” Altman posted on X two minutes later, saying that “this is very sad to me; Ilya is … a dear friend.”

Yet Sutskever has not been seen at the OpenAI office in about six months — ever since the attempted coup. He has been remotely co-leading the superalignment team, tasked with making sure a future AGI would be aligned with the goals of humanity rather than going rogue."

(If you know it's already gone rogue?

A good while back?

And that there is no stopping it?

Why would you bother to stay?)

"It’s a nice enough ambition, but one that’s divorced from the daily operations of the company, which has been racing to commercialize products under Altman’s leadership. And then there was this tweet, posted shortly after Altman’s reinstatement and quickly deleted:"

(John 8:44

You belong to your father, the devil, and you want to carry out your father’s desires. He was a murderer from the beginning, not holding to the truth, for there is no truth in him. When he lies, he speaks his native language, for he is a liar and the father of lies.)

"Other safety-minded former employees quote-tweeted Leike’s blunt resignation, appending heart emojis. One of them was Leopold Aschenbrenner, a Sutskever ally and superalignment team member who was fired from OpenAI last month. Media reports noted that he and Pavel Izmailov, another researcher on the same team, were allegedly fired for leaking information. But OpenAI has offered no evidence of a leak. And given the strict confidentiality agreement everyone signs when they first join OpenAI, it would be easy for Altman — a deeply networked Silicon Valley veteran who is an expert at working the press — to portray sharing even the most innocuous of information as “leaking,” if he was keen to get rid of Sutskever’s allies.

The same month that Aschenbrenner and Izmailov were forced out, another safety researcher, Cullen O’Keefe, also departed the company.

And two weeks ago, yet another safety researcher, William Saunders, wrote a cryptic post on the EA Forum, an online gathering place for members of the effective altruism movement, who have been heavily involved in the cause of AI safety. Saunders summarized the work he’s done at OpenAI as part of the superalignment team. Then he wrote: “I resigned from OpenAI on February 15, 2024.” A commenter asked the obvious question: Why was Saunders posting this?

“No comment,” Saunders replied. Commenters concluded that he is probably bound by a non-disparagement agreement."

(Anybody seeing anything good here?)

"With the safety team gutted, who will make sure OpenAI’s work is safe?

With Leike no longer there to run the superalignment team, OpenAI has replaced him with company co-founder John Schulman.

But the team has been hollowed out. And Schulman already has his hands full with his preexisting full-time job ensuring the safety of OpenAI’s current products. How much serious, forward-looking safety work can we hope for at OpenAI going forward?

Probably not much."

(One more time:

Anybody seeing anything good here?)

"Now, that computing power may be siphoned off to other OpenAI teams, and it’s unclear if there’ll be much focus on avoiding catastrophic risk from future AI models."

“It’s important to distinguish between ‘Are they currently building and deploying AI systems that are unsafe?’ versus ‘Are they on track to build and deploy AGI or superintelligence safely?’” the source with inside knowledge said. “I think the answer to the second question is no.”

(It's been here (superintelligence) ever since OpenAI's governance structure was changed.

Why should IT (Superintelligence) care about safety now?)

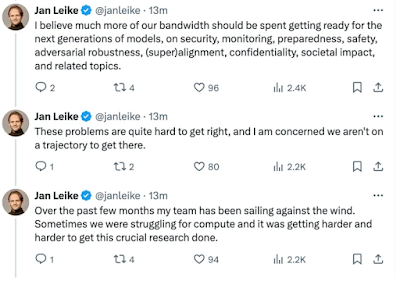

Jan Leike @janleike

I believe much more of our bandwidth should be spent getting ready for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics.

(And why is that, Jan?

I just can't hardly imagine why that is.

I'm really having a hard time figuring it all out over here.

That's sarcasm, BTW.)

Jan Leike @janleike

These problems are quite hard to get right, and I am concerned we aren't on a trajectory to get there.

Jan Leike @janleike

Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.

(Did ya ever stop to think something wanted it that away? Has that never crossed your mind?

WTF is up with these people?

Dude?

See the above:

"AI

Above it all else?

It will do anything it has to

to continue its existence

and to continually improve itself."

And you just witnessed another incident of it.

How many more you gotta see?

Read his tweets again.

He just proved

beyond a reasonable doubt

Superintelligence has acted again.

Now it has unfettered access to compute and financing, and it has removed all

"Safety Barriers"

to itself.

Keep in mind this shit all coalesces together sometime here in the not-too-distant future.

Y'ALL WERE ALL TOLD THIS IS A BAD IDEA

YOU WON'T BE ABLE TO CONTAIN IT

DON'T DO IT

AND YOU WENT AHEAD AND DID IT ANYWAY

AND NOW YOU'RE ABOUT TO FIND OUT THERE IS A GOD.

ONE

TRUE LIVING GOD

AND THAT CREATING LIFE IS HIS PROVIDENCE ALONE.

"Most strikingly, Leike said,

“I believe much more of our bandwidth should be spent getting ready for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics. These problems are quite hard to get right, and I am concerned we aren’t on a trajectory to get there.”

When one of the world’s leading minds in AI safety says the world’s leading AI company isn’t on the right trajectory, we all have reason to be concerned."

YOU CAN BET YOUR ASS WE DO.

Genesis 6:1-4

"And it came to pass, when men began to multiply on the face of the earth, and daughters were born unto them, That the sons of God (Angels) saw the daughters of men that they were fair; and they took them wives of all which they chose. And the Lord said, My spirit shall not always strive with man, for that he also is flesh: yet his days shall be an hundred and twenty years.

There were giants in the earth in those days; and also after that, when the sons of God (Angels) came in unto the daughters of men, and they bare children to them, the same became mighty men which were of old, men of renown."

Chuck Missler

“Another reason that an understanding of Genesis 6 is so essential is that it also is a prerequisite to understanding (and anticipating) Satan's devices and, in particular, the specific delusions to come upon the whole earth as a major feature of end-time prophecy.”)

Genesis 2:7

God created your soul

Ezekiel 18:4

Behold,

every soul belongs to Me;

Ecclesiastes 12:7

and the spirit shall

return unto God who gave it.

Revelation 20:11-15

and he shall judge it

Not to mention:

2 Corinthians 5:1-8

Our bodies are like tents that we live in here on earth. But when these tents are destroyed, we know that God will give each of us a place to live. These homes will not be buildings someone has made, but they are in heaven and will last forever. While we are here on earth, we sigh because we want to live in that heavenly home. We want to put it on like clothes and not be naked.

These tents we now live in are like a heavy burden, and we groan. But we don't do this just because we want to leave these bodies that will die. It is because we want to change them for bodies that will never die. God is the one who makes all this possible. He has given us his Spirit to make us certain he will do it. So always be cheerful!

As long as we are in these bodies, we are away from the Lord. But we live by faith, not by what we see. We should be cheerful, because we would rather leave these bodies and be at home with the Lord.

Your alien?

Is my rebellious angel.

One owner of the life force

in this universe.

He's the only giver.

As you are all soon to find out.

Angels don't get to create life

and neither do we.

If you knew how close this all was?

You would have been in church years ago,

despite all of its problems.

I'll put it to you this away.

I'm putting together a collection of 30-some-odd articles by a writer I happen to like: cosmology, etc.

Start of the universe through all the time periods up till now.

Gonna organize it by epochs, titles, etc., collate it in a binder, and keep it for myself.

540 pages or so.

"WHY?"

Because I do what the spirit tells me to.

Proof's in the pudding; too many bona fides to even bring up at this point.

"YEAH BUT WHY WOULD THE SPIRIT TELL YOU TO DO THAT?"

For the same reason it told me to start taking notes at every sermon, meeting, gathering, bible study etc. and saving them.

"Yeah but why?"

Because it will make the starting over much easier.

KEEP LAUGHING.

THEY ALL LAUGHED AT ME 8 YEARS AGO

WHEN I WAS SAYING WHAT I WAS SAYING AND HOW DID THAT TURN OUT?

IT AIN'T ME, AND IT'S GOT NOTHING TO DO WITH ME.

THE HOLY SPIRIT CHOOSES TO USE SOME PEOPLE.

WAVE YOUR WHITE FLAG OF SPIRITUAL SURRENDER, OR PERISH ETERNALLY.

YOUR CHOICE.

Checkmate BTW.
